“What gets measured gets managed.”

A neat little maxim, usually attributed to Peter Drucker. Except he never said it.

The idea actually traces back to V. F. Ridgway's 1956 critique of performance measurement, and the fuller version is longer, thornier, and a lot less comforting:

“What gets measured gets managed — even when it’s pointless to measure and manage it, and even if it harms the purpose of the organisation to do so.”

That’s content attribution in 2025 in a nutshell.

Because attribution, like the misattributed quote, is often simplified, misunderstood, and sometimes even counterproductive—optimizing for what’s easy to measure instead of what really matters.

And right now, measurement matters more than ever. According to the 2025 State of the CMO Report, 69% of CMOs say leadership now expects measurable results for everything they do—a jump from 59% just two years ago.

So, we surveyed 58 content teams and heard from the folks carrying the weight of attribution every day. Our respondents spanned experience levels from specialists to directors and VPs, working across companies from early-stage startups to established enterprises like Blackbaud.

The titles ranged from Senior Manager of Content Marketing at Loopio to Head of Marketing at PlaytestCloud, with specialists, managers, and directors from companies like Juro in between.

What's working? What's broken? And where are the biggest gaps between what leadership wants and what's actually possible?

Here’s what came back.

This first annual 2025 Content Attribution Report was created in partnership with our friends at Demand-Genius.

Most marketing teams are stuck on the Content Treadmill. We churn out new content and measure its impact on someone else's algorithm more than on our own pipeline. Demand-Genius helps you build a content strategy around impact, not output.

Start with a Free AI Content Audit to spot the trends, gaps, and opportunities in your existing content library. Then, in a 10-minute walkthrough, we'll show you how to integrate with your stack so you can see how every single piece connects to revenue.

What is your North Star or primary goal as a content team?

Responses were almost evenly split across three buckets: traffic, MQLs, and pipeline, each drawing about a quarter of teams. Very few content teams said they’re measured directly on revenue (9%) or purely on leads (10%).

On the surface, the spread looks even. But that split is exactly the issue—there’s no shared definition of success.

Some teams are celebrating eyeballs, others are celebrating opportunities, while only 9% are measured directly on revenue. That disconnect makes attribution messy before the work even starts.

Read: Here’s How 6 SaaS Content Teams Track Attribution

Does your current reporting accurately capture your content's impact?

The largest group says their reporting only "sort of captures value." Nearly half of respondents fell into that bucket, while only a small minority believe their reporting "mostly captures impact."

Reporting mechanisms exist, but they're falling short. Teams are measuring impact, yet when the largest group admits its reporting is only partially accurate, attribution clearly isn't delivering enough to be trusted.

Do you trust the accuracy of your current attribution data?

The majority (71%) say their attribution data is only “sort of accurate but not the full picture.” Another 17% believe the data itself is flawed, and just 12% feel confident in what they’re working with.

The tools themselves aren't broken. GA4, HubSpot, and Amplitude all capture activity just fine. But activity is only one part of the picture. As more marketers move away from gated content, it gets harder to see how those engagements influence complex deal cycles, and harder than ever to evaluate content's impact on the metrics that matter most: revenue.

The framing, we think, is also partly to blame. First-click, last-click, 30-day vs. 60-day lookback windows: each of them narrows the picture, and each of them undercounts content's influence.

This explains why the majority land in the “incomplete picture” camp.

So the attribution debate isn't really about whether the data is accurate; it's about whether it's enough. And until organizations align on what "content influence" actually means, attribution may well continue to feel flawed by design.
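To make the point about single-touch models and lookback windows concrete, here is a minimal Python sketch. Everything in it is a hypothetical assumption for illustration: the buyer journey, the dates, the asset names, and the `first_click`, `last_click`, and `uncredited` helpers are not survey data and not how GA4, HubSpot, or any other tool implements attribution. It simply shows how a case study read 75 days before conversion earns zero credit under both models with either a 30-day or a 60-day window.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Touch:
    asset: str  # hypothetical content asset, e.g. "case study"
    day: date   # date the buyer engaged with it


def in_window(touches, converted_on, window_days):
    """Keep only touches within `window_days` before (or on) the conversion date."""
    return [t for t in touches if 0 <= (converted_on - t.day).days <= window_days]


def first_click(touches, converted_on, window_days):
    """Give 100% of the credit to the earliest touch inside the window."""
    eligible = in_window(touches, converted_on, window_days)
    if not eligible:
        return {}
    first = min(eligible, key=lambda t: t.day)
    return {first.asset: 1.0}


def last_click(touches, converted_on, window_days):
    """Give 100% of the credit to the latest touch inside the window."""
    eligible = in_window(touches, converted_on, window_days)
    if not eligible:
        return {}
    last = max(eligible, key=lambda t: t.day)
    return {last.asset: 1.0}


def uncredited(touches, converted_on, window_days):
    """List assets that get no credit from either single-touch model."""
    credited = set(first_click(touches, converted_on, window_days)) | set(
        last_click(touches, converted_on, window_days)
    )
    return [t.asset for t in touches if t.asset not in credited]


# Hypothetical journey for illustration only (not survey data).
converted_on = date(2025, 6, 30)
journey = [
    Touch("case study", date(2025, 4, 16)),    # 75 days before conversion
    Touch("blog post", date(2025, 5, 21)),     # 40 days before conversion
    Touch("webinar", date(2025, 6, 10)),       # 20 days before conversion
    Touch("pricing page", date(2025, 6, 28)),  # 2 days before conversion
]

for window in (30, 60):
    print(
        f"{window}-day window:",
        "first-click", first_click(journey, converted_on, window),
        "| last-click", last_click(journey, converted_on, window),
        "| no credit:", uncredited(journey, converted_on, window),
    )
```

Widening the window from 30 to 60 days only shifts first-click credit from the webinar to the blog post; the case study still falls outside both windows, and two of the four touches go uncredited either way.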

Are you able to get this data yourself, or do you rely on a data person/team?

The largest group, around a third of respondents, sits in the middle: they use a mix, sometimes pulling data themselves and sometimes leaning on a dedicated data team. Two smaller, equally sized groups said they either rely fully on a data person or can pull reports independently.

This middle ground slows content down. Out-of-the-box dashboards give quick reads, but anything beyond that gets punted to analysts. And the more hoops you jump through to get numbers, the less those numbers shape day-to-day decisions.

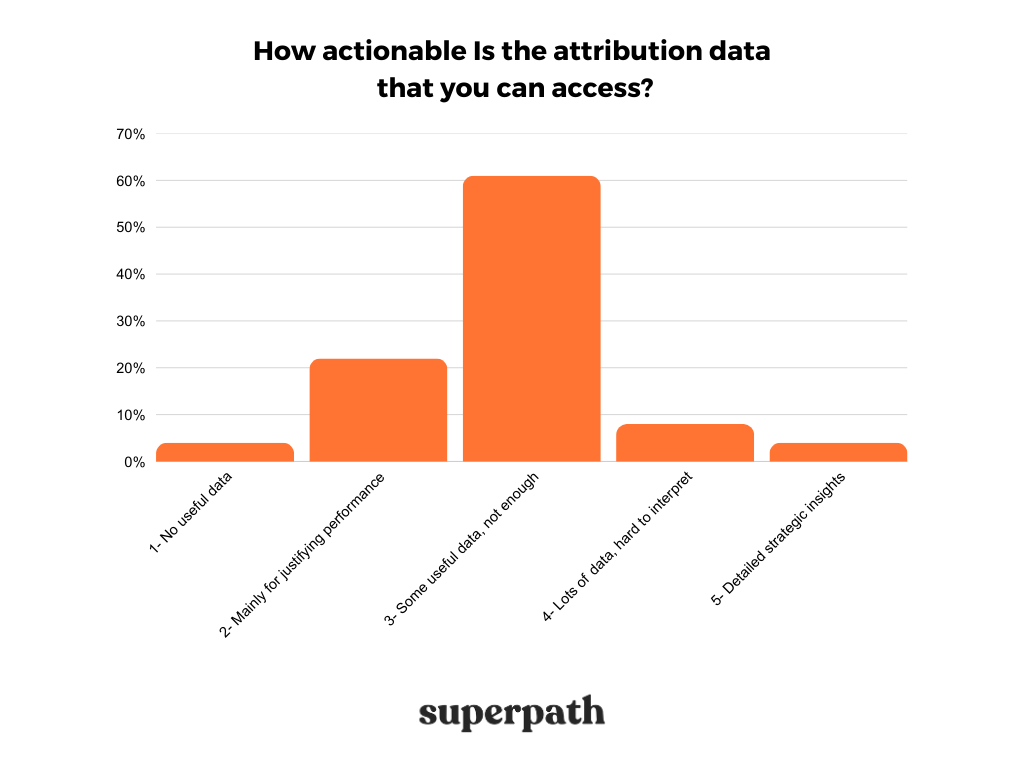

How actionable is the attribution data that you can access?

Most teams say they have “some useful data, but not enough.” A smaller group reports using data mainly for justification, while very few get detailed strategic insights they can act on.

This reflects a bigger theme: content attribution is stuck in the middle. At best, the numbers help defend existing work; at worst, they’re confusing or irrelevant.

The problem isn't the volume of data. Drawing on our own experience as content marketers, we reckon it's clarity. Teams have dashboards full of metrics, but little that actually tells them what to do next.

Are your content and sales teams aligned?

Nearly half (48%) said content and sales “occasionally work together.” A smaller group (26%) works closely together, while 19% rarely interact. A minority operate without a sales team (5%, PLG-led orgs) or have essentially no contact (2%).

This middle-ground alignment isn’t really surprising. For most teams, content and sales aren’t in lockstep day-to-day—nor do they need to be. Occasional collaboration on enablement materials, case studies, or bottom-of-funnel content can be enough if there are good processes for sharing information.

Where things break down is when alignment depends on meetings alone. Teams that build in passive collaboration (e.g., reviewing Gong calls, sharing insights in Slack, monitoring objections in proposals) can support sales without constant syncs.

Rate your level of buy-in from company leadership.

More than a third of teams (over 36%) say leadership fully supports content, while another 19% report partial buy-in. Only a small minority, about 5%, say their executives remain skeptical.

At first glance, that’s reassuring: content seems to have earned a seat at the table. But support without attribution is fragile. If buy-in is rooted in enthusiasm rather than hard data, it won’t hold when budgets tighten.

Does attribution end at signup? Can you track beyond this?

Over half (52%) said their tracking ends at conversion, while 28% can’t reliably track conversions at all. Just 21% are able to see beyond signup into retention, churn, or expansion.

This is what we might call the “conversion cliff.” Content’s impact on acquisition is measured—imperfectly—but its influence on retention, expansion, or lifetime value is rarely captured. That leaves a huge blind spot, especially as recurring revenue models depend on what happens after signup.

In practice, this means content teams can show how they drive signups, but not whether those signups become valuable customers.

Does the lack of clear attribution hold you back from budget allocation?

The majority said yes: better data would directly help them prove content’s value and unlock more resources. A smaller group (14%) said clearer attribution wouldn’t necessarily lead to more budget.

On one hand, this confirms what most content leaders feel instinctively: attribution isn’t just about reporting, it’s about resourcing. If you can’t prove your impact, you’ll struggle to make the case for more headcount, tools, or campaign spend.

But the minority response is telling. Some teams believe that even with stronger data, budgets won’t move. That points to a deeper challenge: in certain companies, the ceiling is perception, not evidence. Content may be seen as a cost center, and attribution alone won’t fix that.

Where are you not getting credit?

We asked this question to uncover the “halo” or “long-tail” effect of content: the places where teams feel their work drives impact but attribution frameworks fail to capture it.

In other words: where is content influencing pipeline and revenue, but not getting credit?

Across 35 open-ended responses, four themes stood out:

1. Social and brand activity disappear in the data.

Daily LinkedIn posts, influencer campaigns, YouTube brand ads, even founder-led thought leadership—all of these create visibility and credibility but rarely tie back to measurable conversions.

“oh, where do I begin 😉 essentially, anything that doesn't lead to a straightforward conversion within a measurable window is currently untrackable. impact of live events on Sales pipeline? unclear. impact of LinkedIn presence on MQLs? unclear. I could go on.”

2. Content with a long shelf life gets undervalued.

Case studies, research reports, and interactive tools often resurface in late-stage sales conversations. Prospects cite them directly in meetings or events months later, but those touchpoints never show up in dashboards.

"Long sales cycles and many sales conversations take months if not more. It can be tricky to attribute anything to revenue. E.g., we know that our case studies are valuable as our prospects tell us they resonate with their situation and even share feedback at events weeks/months after we sent them to them. But we don't [know] if that actually translates to more purchase intent."

3. Ungated assets and “dark social” leave big blind spots.

Blogs, videos, product tours, and resources shared via Slack, WhatsApp, or email circulate widely and influence buying decisions. But because they don't generate form fills or appear in first-touch models, as far as attribution tools are concerned, they might as well not exist.

“Indirect conversions, multiple touchpoints, dark social sharing, brand recall… content initiatives improve intent, but the original source never gets credit.”

4. Re-engagement and expansion aren’t measured.

Content plays a role in reviving dormant leads, nurturing existing accounts, and accelerating upsells. But current systems largely track net-new conversions, leaving these moments of influence invisible.

“Generating new leads can be measured pretty effectively, but we often get dormant existing leads that we manage to re-engage with our content and there's no way in our setup to indicate we've "reactivated" these prospects.”

Most content teams are measuring enough to keep the lights on, not enough to change the room they’re in. So sure, dashboards get updated and reports get shared. But the real question—does this data move strategy, budget, or pipeline?—too often goes unanswered.

We think attribution isn't so much failing as stalling. And stalled attribution leaves content leaders stuck defending line items instead of directing the agenda.

Attribution may never be perfect. But it doesn’t have to be. The challenge—and the opportunity—is to move from “sort of captures value” to “tells the full story.”

At a time when traditional content metrics are falling out of favor and AI is drawing budgets away, we believe content teams need to lead the conversation on capturing and demonstrating the full impact that quality content creates.

Thank you to the 58 teams who shared their realities with us! You gave us a clearer view of what’s really happening—and where the work begins.